Using Kafka

To run Apache Kafka locally, we will use three containers (Kafka, ZooKeeper, and Kafdrop) defined in a Docker Compose file.

After starting the containers, wait a few minutes, then connect with any Apache Kafka-compatible tool on TCP port 29092. To make testing easier in the development environment, this Docker Compose file also includes the Kafdrop tool.

Follow the steps below to start using Kafka:

- Clone the repository

git clone https://github.com/devprime/kafka

- To start

docker-compose up -d

- List the three active containers

docker ps

- To finish

docker-compose down
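Instead of waiting a fixed few minutes after starting the containers, a script can poll port 29092 until it accepts connections. The following is a minimal sketch, not part of the repository: the function name `wait_for_port` and the retry values are illustrative, and it relies on bash's built-in `/dev/tcp` redirection. An open port only shows that the broker accepts TCP connections, so a short extra wait may still be needed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll a TCP port until it accepts connections
# or the attempts run out. Requires bash (uses /dev/tcp redirection).
wait_for_port() {
  local host="$1" port="$2" attempts="${3:-30}" delay="${4:-2}"
  local i=1
  while [ "$i" -le "$attempts" ]; do
    # The subshell opens the connection and closes it again on exit.
    if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
      echo "${host}:${port} is accepting connections"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "${host}:${port} not reachable after ${attempts} attempts" >&2
  return 1
}

# Example (run after `docker-compose up -d`):
#   wait_for_port localhost 29092
```

With the defaults, the call retries 30 times with a 2-second pause, so it gives up after roughly one minute. Pass larger values as the third and fourth arguments on slower machines.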

Configuring topics in Kafka

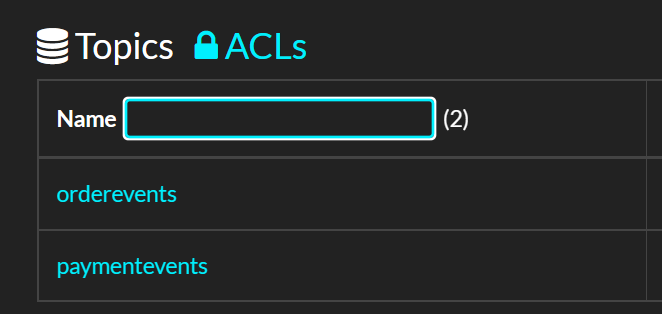

Devprime-based microservices automatically connect to stream services such as Kafka. The examples use a few standard topics, such as orderevents and paymentevents.

- Open Kafdrop in the browser at http://localhost:9000

- Go to the “Topic” option, click “New”, and add “orderevents” and then “paymentevents”

- Check the created topics
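The same topics can also be created from the command line, which is convenient for scripted setups. The sketch below rests on assumptions that are not confirmed by the repository: the broker container is named `kafka`, its image provides the `kafka-topics` CLI (some images name it `kafka-topics.sh`), and the broker listens on `localhost:9092` inside the container. Check `docker ps` and the Docker Compose file, then adjust the variables.

```shell
#!/usr/bin/env bash
# Assumed values (hypothetical): container name and in-container listener.
KAFKA_CONTAINER="${KAFKA_CONTAINER:-kafka}"
BOOTSTRAP="${BOOTSTRAP:-localhost:9092}"

# Print a command, then execute it unless DRY_RUN=1 is set.
run() {
  echo "+ $*"
  [ "${DRY_RUN:-0}" = "1" ] || "$@"
}

# Create one topic with a single partition and a single replica.
# --if-not-exists makes the call safe to repeat.
create_topic() {
  run docker exec "$KAFKA_CONTAINER" kafka-topics --create --if-not-exists \
    --topic "$1" --partitions 1 --replication-factor 1 \
    --bootstrap-server "$BOOTSTRAP"
}

# Example:
#   create_topic orderevents
#   create_topic paymentevents
```

Setting `DRY_RUN=1` prints the commands without running them, which helps confirm the container name and address first. The new topics should then appear in Kafdrop alongside any created through the browser.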

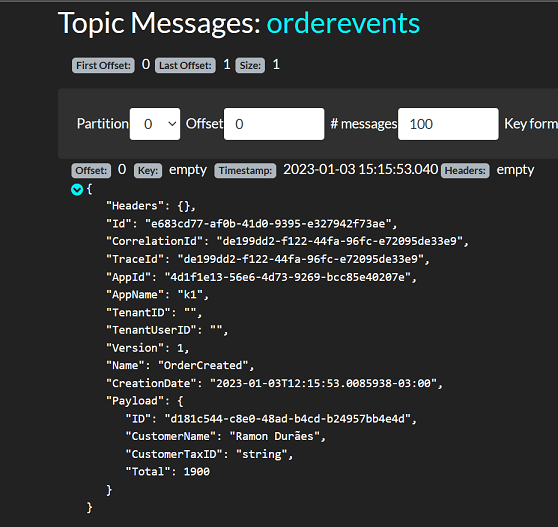

Viewing messages in Kafka

When the microservice runs, it sends events through Kafka. To view them in Kafdrop, click the topic and then “View Messages”.
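To confirm that messages reach a topic before a microservice is involved, a test message can be sent and read back from the command line. This is a sketch under the same unconfirmed assumptions as before: a broker container named `kafka`, an image that provides `kafka-console-producer` and `kafka-console-consumer`, and an in-container listener on `localhost:9092`. The function names and the sample payload are illustrative.

```shell
#!/usr/bin/env bash
# Assumed values (hypothetical): container name and in-container listener.
KAFKA_CONTAINER="${KAFKA_CONTAINER:-kafka}"
BOOTSTRAP="${BOOTSTRAP:-localhost:9092}"

# Print a command, then execute it unless DRY_RUN=1 is set.
run() {
  echo "+ $*"
  [ "${DRY_RUN:-0}" = "1" ] || "$@"
}

# Send one message (second argument) to a topic (first argument).
# The producer reads messages from standard input, one per line.
send_test_event() {
  printf '%s\n' "$2" | run docker exec -i "$KAFKA_CONTAINER" \
    kafka-console-producer --bootstrap-server "$BOOTSTRAP" --topic "$1"
}

# Read up to N messages (default 1) from the start of a topic,
# giving up after 10 seconds without new messages.
read_events() {
  run docker exec "$KAFKA_CONTAINER" kafka-console-consumer \
    --bootstrap-server "$BOOTSTRAP" --topic "$1" --from-beginning \
    --max-messages "${2:-1}" --timeout-ms 10000
}

# Example:
#   send_test_event orderevents '{"test":"hello"}'
#   read_events orderevents 1
```

A message sent this way should also show up in Kafdrop under “View Messages” for the same topic.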